10 years ago


Musk, Wozniak and Hawking urge ban on warfare AI and autonomous weapons

Over 1,000 high-profile artificial intelligence experts and leading researchers have signed an open letter warning of a “military artificial intelligence arms race” and calling for a ban on “offensive autonomous weapons”. The letter, presented at the International Joint Conference on Artificial Intelligence in Buenos Aires, Argentina, was signed by Tesla’s Elon Musk, Apple co-founder Steve Wozniak, Google DeepMind chief executive Demis Hassabis and professor Stephen Hawking along with...



Join the Discussion

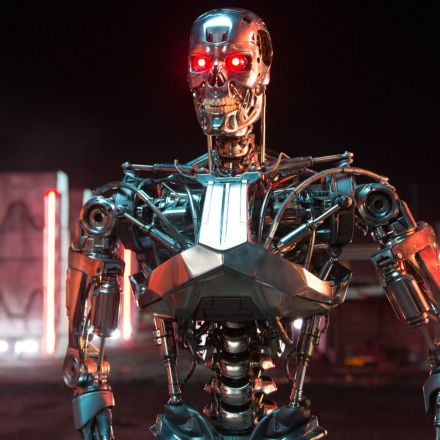

This letter is the one we will look back on when our robot overlords have forced us underground and we will wonder why we didn't listen.

Seriously though, they raise some very good concerns that without human emotion behind it, more killings would most likely happen. Just look at the disconnect with drones at the moment. I imagine it could be much worse with an AI at the controls.

Banning weaponized AI today is like banning weaponized nuclear technology back in the early '90s, when the Cold War ended and it became apparent that nothing good will ever come from an all-out nuclear war. I think this has the potential to be 100 times worse, and if anything were to happen it would be certain death for most if not all of mankind.

The most worrisome fact for me is that even if artificial intelligence in the classic sense isn't the culprit, the tech can get into the hands of the super rich and powerful. I strongly believe we can have an "Elysium" type scenario happening here on earth. Can you imagine trying to defeat an AI-powered drone with nothing but a rifle? These things will have automatic aim, millisecond decision making and generally insane damage avoidance systems. I'm getting carried away here, it seems.

Also, they have the potential to be the size of a small bird and very agile. A quad-copter with a 22LR gun on it with perfect aim is a pretty terrifying idea.

More terrifying I think is that that sort of tech will be easily accessible to everyone, not just the rich and powerful.

And then you get to weaponized nano...and you have a pervasive, unstoppable swarm of machines.

I agree. The sooner the ban happens, the better the position we will be in. If we allow it to continue until one or a few countries get it, we will be in the same position as we are today with nuclear weapons.

Do you think Kim Jong Un would love to have his own killer robot army that would patrol the streets (and houses) and, ahem, terminate any pesky thought-criminals?

Of course he would, just like any other ruler. So the problem here is that the organizations Musk & co are asking to ban "autonomous weapons" are precisely the ones that want to have them the most. Do you think governments might just go ahead and build robot armies for themselves as soon as they can?

Can you guess what's going on here: https://www.youtube.com/watch?v=NtU9p1VYtcQ ?

What about this: https://en.wikipedia.org/wiki/Atlas_%28robot%29 -- "developed by the American robotics company Boston Dynamics, with funding and oversight from the United States Defense Advanced Research Projects Agency (DARPA)" -- Defense? Search and rescue? -Search, definitely, but rescue? -Not so much..

I wonder why A.I. and its threats are not more talked about in public these days. We should be having a public debate about this, and a great one.

It's not a serious debate yet, but it's certainly talked about often and at great length. We consume vast amounts of media on that very subject, everything from Space Odyssey, The Matrix, Terminator, A.I., Blade Runner and Almost Human (cancelled far too soon). Ultron and the Sentinels are the classic Marvel insight into the situation (I'm sure DC has their own versions too, I'm not as familiar with their canon). We have reams and reams of books exploring the subject both as fiction and as ethical analysis.

The problem is that, like zombies or nuclear apocalypse, it's too far in the future for the collective mind of humanity, so it is dismissed and relegated to "pub chat" or the geeky domain. People now, in general, aren't willing to take the prospect seriously enough, even with drones delivering precision bombs to civilian spaces. And to be fair, governments are doing a great job of waving real people in our faces, showing us all those soldiers coming back from combat zones and telling us "oh aren't they heroes!" We're not focussing on their equipment because the media doesn't generally talk about it. Only dedicated activists and interested techheads really keep score.

The rest of us still think this is a problem for a few generations down the line. It's fine, we're safe. We'll never have a drone fly over our town carrying enough... whatever... to kill everyone in a 10-mile radius.

Hell, I live in Scotland and the number of people who until last year didn't even know we have a nuclear sub situated in our largest population centre, one that could kill absolutely every last fucker in this country through blast zone or fallout areas, was, in a word, flabbergasting. Ask anyone: that shit happens elsewhere. Not here. Never here.

I have seen videos of some of the AI weapons. It's pretty unnerving stuff. How in the world would a person or group of people ever be able to defend themselves against something like that? AIs are just machines. And machines go on the fritz all the time. I agree this needs to be stopped now.

https://www.youtube.com/watch?v=wE3fmFTtP9g

The above video is what I'm talking about. That thing is creepy!

It amazes me to no end that we can create such amazing things for such base and petty purposes. Teaching a computer to think, and probably a few decades down the line creating something close to autonomous consciousness, then taking this and using it just to make others miserable.

AI weapons will have to be tested and the first one to go rogue and kill its master will probably spell the end of the AI program. Additionally, the machine would need to kill AND reproduce, something that's not a few years away.

About a hundred years ago, it was the use of large battleships.

About 75 years ago, it was TNT and chemical warfare.

About fifty years ago, it was atomic weapons, the hydrogen bomb, and nuclear weapons.

Now, it's AI warfare.

Woz and Hawking, I really care nothing about. Hawking is a physicist, not an AI expert. Woz certainly has computer cred, but he's been edging toward SJW status, which is frankly annoying.