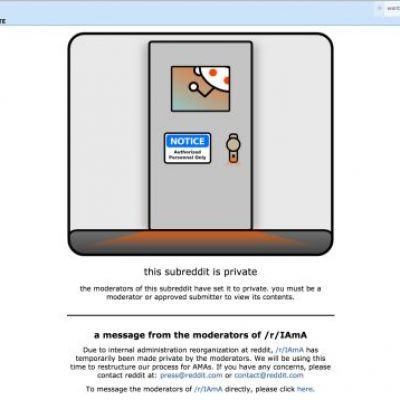

Reddit Admins have banned /r/thefappening for "linking to stolen content" after receiving erroneous DMCA takedown notices.

If celebs cry about it, shit gets done. Reddit has tons of other nasty places where they share some disgusting stuff, yet no one bats an eye.

They also did it because it gave them bad press from the media, which is never a good thing. I'm sure if this other stuff caught the media's eye (and was important), maybe the same thing would happen?

What kind of nasty places?

Like jedlicka mentioned, subreddits like photoplunder, picsofdeadkids, cutefemalecorpses, sexyabortions, candidfashionpolice, beatingwomen, and all other sorts of horrible things. I'm sure just from reading the names of these places, you can get the gist of how shitty parts of Reddit can be.

I agree with troople: Reddit is seriously in trouble. Once the media really gets a grasp of all these different outlets of horrible content, it's going to be all downhill from there. It happened before with /r/jailbait and it will happen again.

It just gets sleazier and sleazier.

They have subforums where people share stolen nude pics of regular people (which they think is fine as long as the name isn't revealed). They also share pics of dead people, kids, etc. It's extremely disturbing.

Honestly, I'm more disappointed that reddit caved to the pressure and banned the sub than I am that "shock" subreddits exist. You either have free speech or you do not.

The thing is... How far should an organization go to protect free speech? Is there a point where we as a community should draw the line? Some content IS distasteful, but there are types of content that are borderline illegal, and they actually hurt people with every share. Hmm, this isn't a simple matter.

I think everything that directly hurts someone is out of the question. Your freedom ends where the other person's begins, but everyone's equal. So these celebrities don't have the right to have their photos taken down any more than anyone else who had their photos stolen and posted on the internet, and vice versa. If they do have those rights, why don't the other subreddits containing stolen photos get deleted? What's bad isn't that they've taken the subreddit down; it's that they've taken it down supposedly for moral reasons while a bunch of other women are victims of the same thing and nobody cares.

But I don't get what the fuss is about with these leaks; maybe it's just a bunch of horny nerds pissed that the photos are a bit harder to find (even though they're all over porn sites). The best example of free speech being violated by Reddit's admins is the Zoe Quinn scandal, where the mods just mass-banned every conversation about it and refused to let the information spread. That information is proof of how corrupt the gaming journalism industry is, and of how easily indie game developers can cheat their way into the industry. It's funny to me how the bans and the censorship were in Zoe's favour, in line with the victim role she tries to play in the public eye, blaming everything on 4chan or something to hide the truth.

I'm just rambling. In conclusion, reddit limits free speech not for moral reasons but for image reasons. They don't care about morals, they just care about Reddit's image. Personally, I think everyone, no matter how fucked up they are, should be able to have their own community on a website as long as they don't directly hurt other people. We also have to understand that once something is on the internet, it never really goes away, so there's no use in censoring it: as long as it's wanted, it'll be shared, and you just have to accept that. In my opinion, what we should focus on isn't the sharing of the pics, it's punishing the hacker, who's the real ...

I 100% agree. Selective censoring only further proves that the admins behind reddit did it for image and self-preservation, not because they felt an urgent need to act out of empathy for the people being hurt by the material.

I'm hurt by republican rhetoric and religious dogma. Can I request that all such talk be removed? Illegal content aside, you just can't draw a line between what's acceptable and what's not. You do not have the right to not be hurt.

With reddit, it's important to note the difference between admin actions and mod actions. The admins are there to run a website and enforce its handful of rules. Moderators are there to enforce their own agenda, and yes, some of them will openly censor opinions they don't agree with. While it's no way to maintain a community, it's well within the mods' rights to do so. It's their community.

Ultimately, it seems admin decisions (whether they'll admit it or not) come down to how much time an issue is taking up. /r/thefappening was suddenly a hotbed of activity and forced the admins to deal with constant (albeit misdirected) DMCA takedown notices. Since it's only one of tens of thousands of subreddits, I can understand why they made their decision. The same thing happened with /r/jailbait after the Anderson Cooper story: it was banned not because of its content but because some users were constantly posting child porn and the mods failed to prevent it.

I said directly hurt; that doesn't mean offended. But I see your point, and I agree that these kinds of situations must be a pain for admins. As I said, though, these are the things people cry out about, but they're not the worst the admins have done. The Zoe Quinn scandal and the censorship of even peaceful conversations about the subject is what made me angry at them and why I stopped using the site.

And that's the only one I'm aware of; who knows if it's the only subject they've censored.

I don't know anything about the "Zoe Quinn scandal," but I assume that any censorship was done by the mods of that subreddit and not the admins. The only thing close to censorship the admins have done is banning entire subreddits or accounts. They're not subtle about it.

There's proof that two admins were shadowbanning users. There are images showing a user asking for a justification, and the admin just telling them it was a 4chan raid. Then the user said they don't even go on 4chan, and the admin just responded that it was vote manipulation. They also deleted entire threads and even banned people for upvoting those threads. Even a mod on /r/games got demoted because of it.

It's really weird; they just censored the whole thing, and there's solid proof. I'll post the imgur album if I find it.

Here: https://imgur.com/a/f4WDf

Does anyone know what the policy of this website is regarding stuff like this? What would happen if someone were to start a tribe like photoplunder or picsofdeadkids?

The terms of use are short and clear, so you could try reading through them quickly. It doesn't look like anything there rules it out, except for two lines that would give Snapzu the right to delete them.

> By using Snapzu, you agree not to make available content that is threatening, harmful, unlawful, abusive, harassing, or libelous.

(That shouldn't be a problem.)

> Snapzu does not take any responsibilities for any content posted from subscribers, and reserves the rights to remove any content at any time, or suspend any services at any time without prior notice.

So this says that if an admin doesn't agree with something, they can delete it. I could see that happening (and wouldn't mind) with picsofdeadkids, because I wouldn't want that on my website, but it depends on the purpose.

Otherwise, they make it clear that you're responsible for both the content you share and the content you view, and it's fine as long as it's not illegal or harassing another user.

The thing is that reddit has (or at least had at one point) rules against stuff like this; they just don't enforce them. So unless some admin actually said "we're gonna actively remove content like this," we don't know what their policy is going to be.

Personally I believe that there is nothing gained (and a lot lost) in allowing such content.

It really depends on the content and its purpose. In my opinion, there is nothing lost in allowing that content if some people want it enough to build a community around it. What's fun about reddit is how the ease of making a subreddit opens the door for any community to settle into its own little part of the internet, and as long as they don't hurt anyone, I think they should be able to stay. That said, I'm not talking about something specific like this: if they make a community whose sole purpose is to violate someone's privacy (like "the fappening" did), it's a bit more complicated than that.

Fuck man.

http://i.imgur.com/76tVwoI.gif

Reddit got popular enough to get out of control, and now it'll go down just like Digg did. I've stopped using it because of the Zoe Quinn mass ban a few weeks ago, and I only go back for 2 or 3 smaller subreddits with unique content.

Anyway, its quality is going down really quickly, which is part of the reason people are leaving now; it happens to every website that gets too popular. If the community's norms aren't enforced hard enough, people who don't know what they're doing try to become part of the community anyway without learning how it works, so they start posting really bad stuff and overusing the site's memes, just like Facebook does with rage faces. This ruins every joke created on the website, and it just doesn't have a personality anymore.

Meh who cares, I stopped using it a long time ago.

I've been hearing about this all over Twitter; reddit is in trouble.