Join the Discussion

Maternitus already posted this

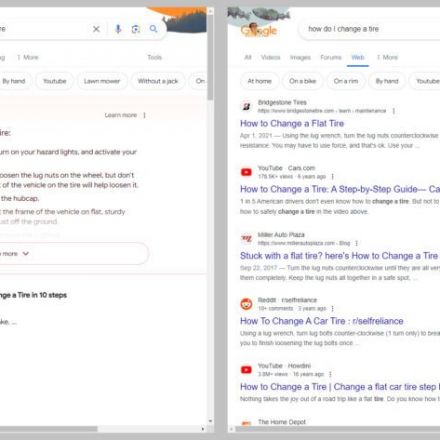

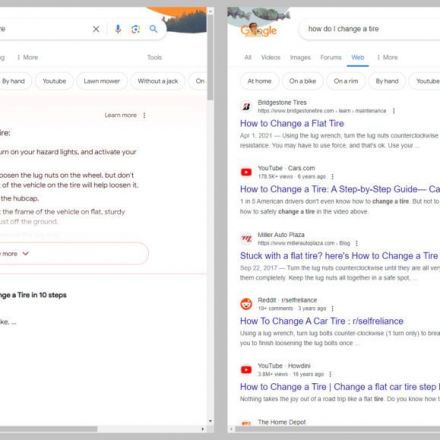

The article is written from the user's point of view, and it is actually a good tip for minimizing the AI garbage in your search results. My experience comes from the other side of the internet, i.e. hosting and designing a website. I've encountered really terrible web practices around SEO (search engine optimization): most of SEO is Google-focused, and there is no real common optimization that applies to all search engines. The only thing you control across engines is whether to allow or disallow each one, so there's just one rule per engine: yes or no. That's it.

To keep AI search bots out, I had to add further rules to robots.txt, and those again just allow or disallow the respective bots. But here it comes: neither set of rules has to be obeyed by the bots. Compliance is voluntary, both for AI and for regular search. So, on the design and code end of things, it's rather crap.
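To make the robots.txt point concrete, here is a minimal sketch in Python using the standard library's `urllib.robotparser`. The robots.txt content, the `is_allowed` helper, and the example.com URL are illustrative assumptions, not my actual configuration; `GPTBot` is used only as one example of an AI crawler's user-agent token.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url.

    This is the check a well-behaved crawler performs on itself.
    Nothing on the server side enforces the answer.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/articles/1"  # placeholder URL
    print(is_allowed(ROBOTS_TXT, "GPTBot", page))     # False
    print(is_allowed(ROBOTS_TXT, "Googlebot", page))  # True
```

Note that the whole decision happens inside the crawler's own code. A bot that never runs a check like this simply fetches the page anyway, which is exactly the weakness described above.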

These practices should be on the top-priority list of the W3C, because this is a destructive way of controlling the internet for both sides, users and designers alike. Of course I want my site to be at the top of your searches, but only if you are searching for the content that's in my projects. And users just want the results, the content they were asking for. It should be easier for users to choose an engine, it should be easier for designers to choose which engines they allow, and the companies offering those engines should have to obey those rules on the server side of the websites.