1 year ago


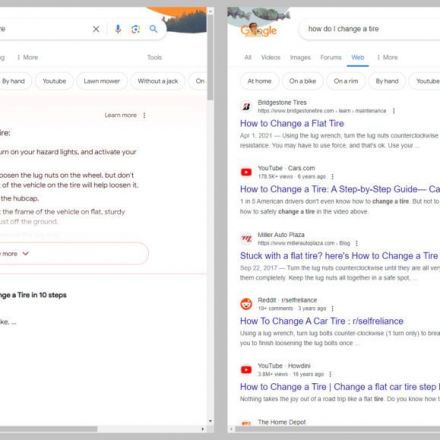

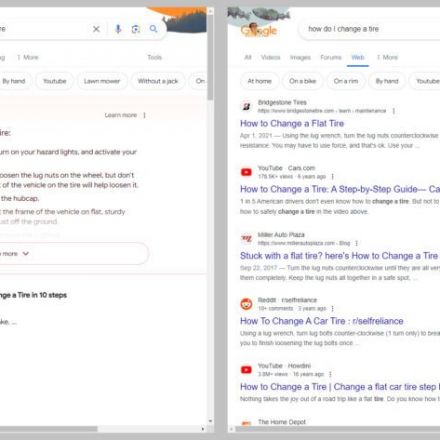

Google Search adds a “web” filter, because it is no longer focused on web results

Google Search now has an option to search the "web," which is not the default anymore.


Is it smart to adjust robots.txt to exclude all of Google's crawlers from my brand-new website?

While reading the article, I started to think that, once this kind of search takes over, it could be the downfall of the regular internet, which is already being wrecked by moguls like Google, Meta, X, Microsoft and the rest of those assholes. A search tool is very handy, but only as long as there are regular websites left to search. I foresee an impersonal, perhaps even inhumane, internet if we let those profit-before-anything-else bitches do their bidding.

Decentralising is one good answer to this, and it is really the "old" internet in a new form. Another would be an adjusted robots.txt that refuses scraping by those companies, and especially by their AIs. But what would such a file actually contain? Does anyone here have good ideas? (I suspect the answer will be crickets.)

I think this is a solution.

Edit: Uploaded it. :-)
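For the question above about what such a robots.txt could contain, here is a minimal sketch that generates one and checks it with Python's standard-library `urllib.robotparser`. The crawler tokens are an assumption chosen for illustration, not a complete list: `Google-Extended` (Google's opt-out token for use in its AI models), `GPTBot` (OpenAI) and `CCBot` (Common Crawl). The helpers `build_robots_txt` and `is_allowed` are hypothetical names introduced here.

```python
from urllib.robotparser import RobotFileParser

# Illustrative crawler tokens to refuse (an assumption, not an exhaustive list):
# Google-Extended is Google's opt-out token for AI-model use, GPTBot is
# OpenAI's crawler and CCBot is Common Crawl's. Vendors add and rename
# tokens over time, so check their documentation before relying on this.
AI_CRAWLERS = ["Google-Extended", "GPTBot", "CCBot"]


def build_robots_txt(blocked_agents):
    """Return robots.txt text that disallows every path for blocked_agents
    and allows everything for all other crawlers (hypothetical helper)."""
    lines = []
    if blocked_agents:
        # Consecutive User-agent lines form one group sharing the rule below.
        for agent in blocked_agents:
            lines.append(f"User-agent: {agent}")
        lines.append("Disallow: /")
        lines.append("")  # a blank line closes the group
    # Catch-all group for every crawler not named above.
    lines.append("User-agent: *")
    lines.append("Allow: /")
    return "\n".join(lines) + "\n"


def is_allowed(robots_txt, agent, url):
    """Check a URL against robots.txt text with the standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    text = build_robots_txt(AI_CRAWLERS)
    print(text)
    for agent in ["GPTBot", "Googlebot", "SomeOtherBot"]:
        print(agent, is_allowed(text, agent, "https://example.com/blog/post"))
```

Adding `"Googlebot"` to the list is what the first question asks about, but it would stop Google Search from crawling the site at all, which usually costs far more than blocking only the AI-specific tokens. Keep in mind that robots.txt is a request that well-behaved crawlers honor, not an enforcement mechanism.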