Superintelligence: fears, promises, and potentials

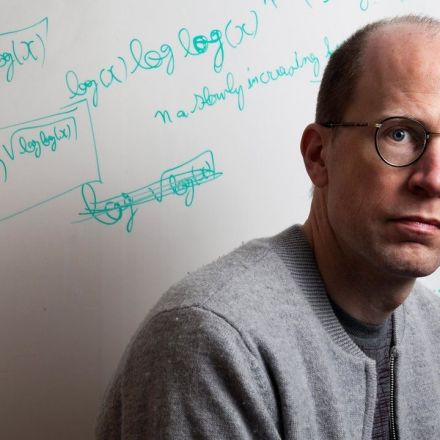

Oxford philosopher Nick Bostrom, in his recent and celebrated book *Superintelligence: Paths, Dangers, Strategies*, argues that advanced AI poses a potentially major existential risk to humanity, and that advanced AI development should be heavily regulated and perhaps even restricted to a small set of government-approved researchers. Bostrom’s ideas and arguments are reviewed and explored in detail, and compared with the views of three other contemporary thinkers on the nature and implications...
