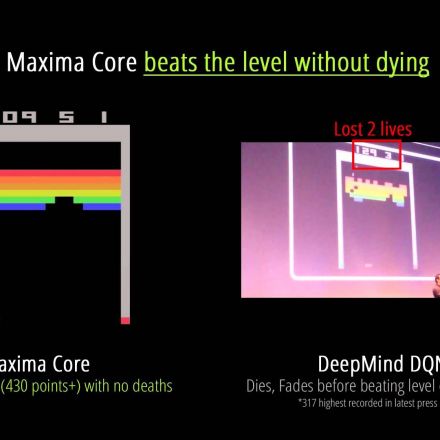

Maxima Core Atari Demo

Maxima Inc., a Michigan-based AI startup, has released a video demonstration of its artificial intelligence software learning to perform multiple tasks across a variety of unrelated domains, without any modification to the fundamentals of its underlying system.


The video includes three distinct demos. Maxima believes that many current artificial intelligence demonstrations miss the point. “There are plenty of AI startups doing very impressive things right now,” says Bobby Lafever, Maxima’s founder, “but none of them have ever demonstrated generality. What we’ve created is a singular system that can learn to perform many tasks.”

Maxima chose to reveal all of its demonstrations at once to offer a proof of concept for generality: a single system that can learn and adapt to thrive in a number of different environments without prior knowledge of what those environments might be.

The video organizes the demonstrations by difficulty:

The first and simplest test requires the Maxima core to output a numeric value between an upper and a lower bound. The core learns to do this quickly, and the test is now a permanent part of its boot diagnostics check.

In the second demo, Maxima goes head-to-head with Google’s DeepMind, using DeepMind’s Atari demo as a benchmark for comparison. As expected, familiar games such as Breakout, Beamrider and Space Invaders are included. Maxima is also shown playing through the first level of Ms. Pac-Man, a game that the MIT Technology Review says “illustrates [DeepMind]’s greatest limitation” because of its “[inability] to make plans even a few seconds ahead”. In Q-Bert, the Maxima core discovers and exploits a known bug that lets it score infinite points by jumping off the highest block with perfect timing.

In the last demo, Maxima experiments with speech recognition. The Maxima core is first trained on the text of a few distinct words; then, using a set of training data, it learns to associate those text objects with spoken audio input. In the demonstration, new test WAV files are fed into the core manually, and it outputs text that accurately matches the words spoken in each audio file.

As for how and why the technology works, Maxima notes that the core is only partially complete relative to its original specifications, and its creators expect radical improvements before the next press release. One detail the company does mention is that the current system can run on a Raspberry Pi B+. Follow Maxima’s progress at http://www.maxima.com
