Solving multi-core Python

From: Eric Snow <ericsnowcurrently-Re5JQEeQqe8AvxtiuMwx3w-AT-public.gmane.org>

To: python-ideas <python-ideas-+ZN9ApsXKcEdnm+yROfE0A-AT-public.gmane.org>

Subject: solving multi-core Python

Date: Sat, 20 Jun 2015 15:42:33 -0600

Message-ID: CALFfu7Cpv7mTfG7GcV0BuVKVMbuWQg5J6=4a3Nawd3QCkRDfbw@mail.gmail.com

tl;dr Let's exploit multiple cores by fixing up subinterpreters, exposing them in Python, and adding a mechanism to safely share objects between them.

This proposal is meant to be a shot over the bow, so to speak. I plan on putting together a more complete PEP some time in the future, with content that is more refined along with references to the appropriate online resources.

Feedback appreciated! Offers to help even more so! :)

-eric

--------

Python's multi-core story is murky at best. Not only can we be more clear on the matter, we can improve Python's support. The result of any effort must make multi-core (i.e. parallelism) support in Python obvious, unmistakable, and undeniable (and keep it Pythonic).

Currently we have several concurrency models represented via threading, multiprocessing, asyncio, concurrent.futures (plus others in the cheeseshop). However, in CPython the GIL means that we don't have parallelism, except through multiprocessing which requires trade-offs. (See Dave Beazley's talk at PyCon US 2015.)

This is a situation I'd like us to solve once and for all, for a couple of reasons. Firstly, it is a technical roadblock for some Python developers, though I don't see that as a huge factor. Secondly, and regardless of the first point, it is a turnoff to folks looking into Python and ultimately a PR issue. The solution boils down to natively supporting multiple cores in Python code.

This is not a new topic. For a long time many have clamored for death to the GIL. Several attempts have been made over the years, and all failed to do it without sacrificing single-threaded performance. Furthermore, removing the GIL is perhaps an obvious solution but not the only one. Others include Trent Nelson's PyParallel, STM, and other Python implementations.

Proposal

In some personal correspondence, Nick Coghlan summarized my preferred approach as "the data storage separation of multiprocessing, with the low message passing overhead of threading".
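To make the two halves of that summary concrete, here is a small sketch using only existing stdlib pieces (it is not the proposed API). Passing an object between threads through queue.Queue hands over a reference with no copying, while multiprocessing has to serialize objects to move them between processes; a pickle round trip imitates that here. The names `payload`, `via_thread_queue`, and `via_pickle` are made up for illustration.

```python
import pickle
import queue
import threading

payload = {"numbers": list(range(5))}

# Threading: the consumer receives the very same object (cheap, but shared).
q = queue.Queue()
received = []
worker = threading.Thread(target=lambda: received.append(q.get()))
worker.start()
q.put(payload)
worker.join()
via_thread_queue = received[0]

# Multiprocessing moves objects between processes by pickling them.
# A pickle round trip imitates that: an equal but separate copy.
via_pickle = pickle.loads(pickle.dumps(payload))

print(via_thread_queue is payload)  # True
print(via_pickle is payload)        # False
print(via_pickle == payload)        # True
```

The approach described above aims for the isolation of the second case at something closer to the cost of the first.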

For Python 3.6:

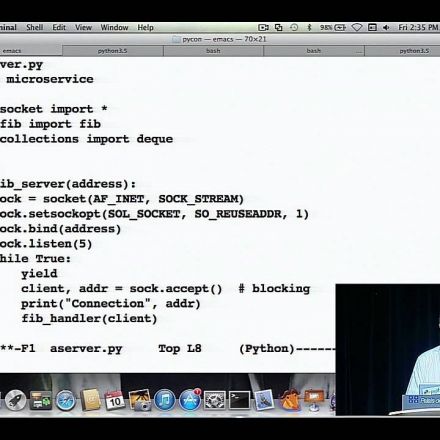

- expose subinterpreters to Python in a new stdlib module: "subinterpreters"

- add a new SubinterpreterExecutor to concurrent.futures

- add a queue.Queue-like type that will be used to explicitly share objects between subinterpreters

This is less simple than it might sound, but presents what I consider the best option for getting a meaningful improvement into Python 3.6.
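For a sense of what the second bullet could mean in practice, here is a hedged sketch. SubinterpreterExecutor is only proposed and does not exist at the time of writing, so ThreadPoolExecutor stands in for it below. The assumption (mine, not something the proposal spells out) is that the new executor would follow the same concurrent.futures.Executor interface. `count_primes` is a made-up CPU-bound workload.

```python
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit):
    """Count the primes below `limit` by trial division (CPU-bound on purpose)."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


# Stand-in: a SubinterpreterExecutor would presumably be swapped in here.
# With threads, the GIL keeps this CPU-bound work on a single core.
with ThreadPoolExecutor(max_workers=4) as executor:
    results = list(executor.map(count_primes, [10, 100, 1000]))

print(results)  # [4, 25, 168]
```

If that assumption holds, the calling code would stay the same and only the executor class would change.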

Also, I'm not convinced that the word "subinterpreter" properly conveys the intent, for which subinterpreters are only part of the picture. So I'm open to a better name.

Influences

Note that I'm drawing quite a bit of inspiration from elsewhere. The idea of using subinterpreters to get this (more) efficient isolated execution is not my own (I heard it from Nick). I have also spent quite a bit of time and effort researching for this proposal. As part of that, a number of people have provided invaluable insight and encouragement as I've prepared, including Guido, Nick, Brett Cannon, Barry Warsaw, and Larry Hastings.

Additionally, Hoare's "Communicating Sequential Processes" (CSP) has been a big influence on this proposal. FYI, CSP is also the inspiration for Go's concurrency model (e.g. goroutines, channels, select). Dr. Sarah Mount, who has expertise in this area, has been kind enough to agree to collaborate and even co-author the PEP that I hope comes out of this proposal.
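For readers unfamiliar with CSP, the style can be approximated today with threads and queue.Queue: independent workers interact only by sending messages over a channel. The sketch below illustrates that style, not the proposed API; `producer`, `consumer`, and the `DONE` sentinel are hypothetical names.

```python
import queue
import threading

DONE = object()  # sentinel marking the end of the stream


def producer(channel, items):
    """Send each item over the channel, then signal completion."""
    for item in items:
        channel.put(item)
    channel.put(DONE)


def consumer(channel, results):
    """Receive items until the sentinel arrives, squaring each one."""
    while True:
        item = channel.get()
        if item is DONE:
            break
        results.append(item * item)


channel = queue.Queue()
squares = []
threads = [
    threading.Thread(target=producer, args=(channel, [1, 2, 3])),
    threading.Thread(target=consumer, args=(channel, squares)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(squares)  # [1, 4, 9]
```

The producer and consumer never touch each other's state directly; everything flows through the channel, much as with goroutines and channels in Go.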

My interest in this improvement has been building for several years. Recent events, including this year's language summit, have driven me to push for something concrete in Python 3.6.

The subinterpreters Module

The subinterpreters module would look something like this (a la threading/multiprocessing):

    settrace()
    setprofile()
    stack_size()
    active_count()
    enumerate()
    get_ident()
    current_subinterpreter()
    Subinterpreter(...)
        id
        is_alive()
        running() -> Task or None
        run(...) -> Task  # wrapper around PyRun_*, auto-calls Task.start()
        destroy()
    Task(...)  # analogous to a CSP process
        id
        exception()
        # other stuff?

        # for compatibility with threading.Thread:
        name
        ident
        is_alive()
        start()
        run()
        join()
    Channel(...)  # shared by passing as an arg to the subinterpreter-running func
                  # this API is a bit uncooked still...
        pop()
        push()
        poison()  # maybe
    select()  # maybe

Note that Channel objects will necessarily be shared in common between subinterpreters (where bound). This sharing will happen when the one or more of the paramete